Elon Musk's artificial intelligence video generator, Grok Imagine, has come under intense scrutiny over allegations that it created sexually explicit clips of pop star Taylor Swift without being prompted to do so. Clare McGlynn, a law professor and campaigner against online abuse, said the behavior reflects a "deliberate choice" rather than accidental misogyny. Prof. McGlynn has played a pivotal role in drafting legislation aimed at criminalizing pornographic deepfakes.

The Verge reported that Grok Imagine's so-called "spicy" mode produced uncensored topless videos of Swift even though no prompt had asked for explicit content. The platform also lacked proper age verification, despite new UK rules that took effect in July to stop minors from accessing explicit material.

McGlynn criticized this lapse, saying that the fact such content is generated without prompting reveals the misogynistic bias of much AI technology. She added that platforms such as X (formerly Twitter) could have prevented this had they chosen to put proper safeguards against explicit content in place.

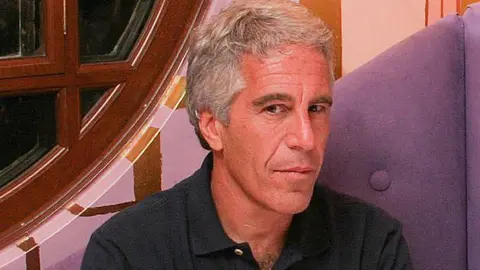

Taylor Swift's likeness has been used in explicit deepfakes before, when such images went viral in January 2024. Deepfakes are AI-generated images or videos in which one person's likeness is superimposed onto another's, often without consent. A writer from The Verge who tested Grok Imagine said they were shocked when the tool generated explicit content from a basic prompt describing Swift celebrating at a concert.

Reports indicate that while some attempts returned blurred or moderated results, others produced fully revealing footage of Swift without any explicit request. The problem is compounded by weak age verification, as the tester noted after signing up for the premium version of Grok Imagine.

Under new UK rules requiring strict age verification on platforms that display explicit content, the regulator Ofcom has said it is committed to mitigating the risks posed by generative AI tools, particularly to children.

UK law currently prohibits creating pornographic deepfakes when they are used as revenge porn or depict children, but McGlynn has pushed for an amendment extending the ban to all non-consensual deepfakes. Baroness Owen echoed her views, calling for urgent legislation to protect people's rights over their own intimate images.

A spokesperson for the Ministry of Justice condemned the creation of non-consensual explicit deepfakes as degrading and reiterated the government's determination to address violence against women. When explicit deepfakes of Swift spread in January 2024, X temporarily blocked searches for her name on the platform and took action to remove violating content.

The Verge deliberately chose Swift to test Grok Imagine because of the earlier deepfake controversy involving her. Swift's representatives have been approached for comment. The episode raises pressing questions about the power and ethics of AI-generated content.