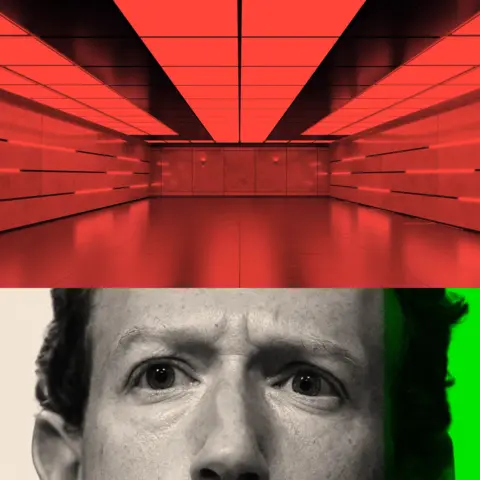

Mark Zuckerberg is said to have started work on Koolau Ranch, his sprawling 1,400-acre compound on the Hawaiian island of Kauai, as far back as 2014. It is set to include a shelter, complete with its own energy and food supplies, though the carpenters and electricians working on the site were banned from talking about it by non-disclosure agreements, according to a report by Wired magazine. A six-foot wall blocked the project from view of a nearby road.

Asked last year if he was creating a doomsday bunker, the Facebook founder gave a flat 'no'. The underground space, spanning some 5,000 square feet, is, he explained, 'just like a little shelter, it's like a basement'. However, this hasn't stopped speculation about his decision to buy 11 properties in the Crescent Park neighborhood of Palo Alto, which apparently include a 7,000-square-foot underground space. Though his building permits refer to basements, some of his neighbors call it a bunker or a billionaire's bat cave.

Other Silicon Valley billionaires appear to be following suit, buying up land with underground spaces potentially suitable for luxury bunkers. Reid Hoffman, the co-founder of LinkedIn, has discussed the concept of 'apocalypse insurance', hinting that many of the wealthy are preparing for a possible crisis, with New Zealand being a favored hideout. This raises the question: are they preparing for climate change, pandemics, or war?

The advancement of artificial intelligence (AI) has only added to these existential fears. Ilya Sutskever, a co-founder and former chief scientist of OpenAI, is wary of the implications of developing artificial general intelligence (AGI) and has suggested that AI company scientists might need to consider taking shelter before its release because of unforeseen ramifications.

This sense of urgency and paranoia is echoed by other tech leaders, prompting discussion about the future of AI and the catastrophic outcomes it could bring. Concerns about developments that could lead to super-intelligent AI suggest that these preparations are not purely precautionary but reflect genuine fears within the tech community.

Governments, meanwhile, are split: some are moving to protect against these threats while others seem resistant to regulation, highlighting a divide in how the risks posed by advanced technologies are understood. Against this backdrop of tech moguls seeking refuge from anticipated calamities, the implications for everyday people remain unclear. Some experts dismiss the fears as alarmist and question whether the discussion of AGI distracts from the more immediate work needed on AI ethics and safety.